"Human-Like" Music Generation

Key Takeaways

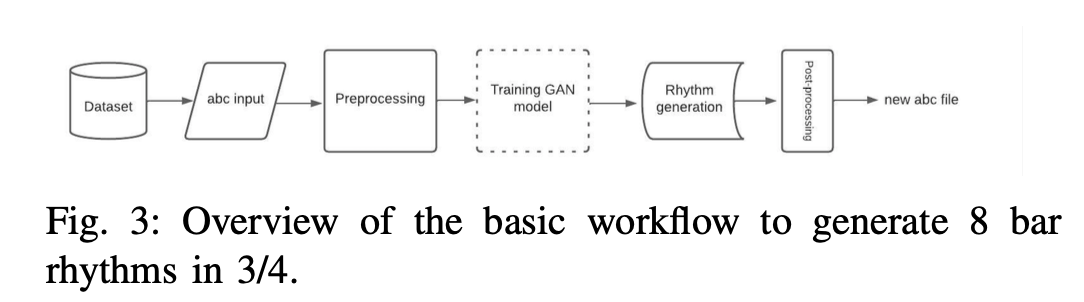

For the Digital Musicology course at EPFL, my team and I developed three models that generate, respectively, human-like rhythms, melodies, and complete musical pieces. All three were based on a corpus of Swedish music. In each of the three labs, we defined:

1 - evaluation metric

2 - feature encoding

3 - model definition

4 - training phase

5 - output generation

6 - results analysis

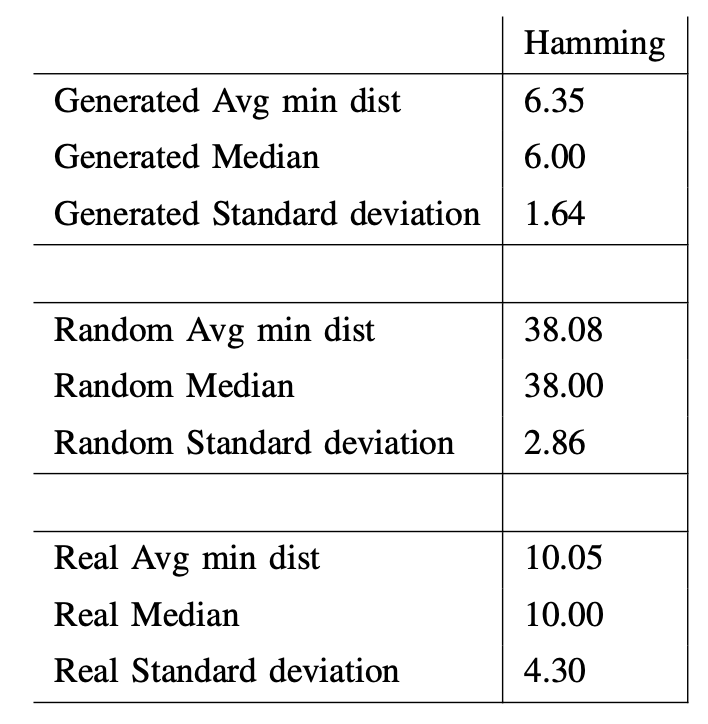

Distance metric: minimum Hamming distance on a vectorized version of the rhythmic data. More precisely, each generated output is compared with every vectorized rhythm in the dataset, and the minimum of those distances is taken.
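As a minimal sketch of this distance, assuming every rhythm has already been vectorized to a list of 0s and 1s of the same length (the function names and toy vectors below are illustrative, not taken from our lab code):

```python
from typing import Sequence


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Count the positions at which two equal-length vectors differ."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x != y for x, y in zip(a, b))


def min_hamming_distance(generated: Sequence[int],
                         corpus: Sequence[Sequence[int]]) -> int:
    """Distance from one generated rhythm to its nearest corpus rhythm."""
    return min(hamming(generated, reference) for reference in corpus)


# Toy data (made up for illustration): two corpus rhythms, one generated rhythm.
corpus = [
    [1, 0, 0, 0, 1, 0, 1, 0],
    [1, 0, 1, 0, 1, 0, 1, 0],
]
generated = [1, 0, 0, 0, 1, 0, 0, 0]

print(min_hamming_distance(generated, corpus))  # -> 1
```

A distance of 0 would mean the generated rhythm is an exact copy of something in the corpus, so very small values are not automatically better.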

Evaluation metric: mean, median, and standard deviation of the distance metric, computed for the original corpus, random music generation, and GAN-based music generation.
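These summary statistics can be computed with Python's standard `statistics` module. The sketch below assumes the sample standard deviation (`statistics.stdev`); the helper name `summarize` and the distance values are made up purely for illustration.

```python
import statistics
from typing import Dict, Sequence


def summarize(distances: Sequence[float]) -> Dict[str, float]:
    """Mean, median, and sample standard deviation of a list of distances."""
    return {
        "mean": statistics.mean(distances),
        "median": statistics.median(distances),
        "sd": statistics.stdev(distances),  # assumption: sample SD, needs >= 2 values
    }


# Hypothetical minimum distances for one source of rhythms (not real results).
toy_distances = [0, 1, 1, 2]
print(summarize(toy_distances))
```

Running the same function on the distances from the corpus, the random generator, and the GAN gives three directly comparable rows.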

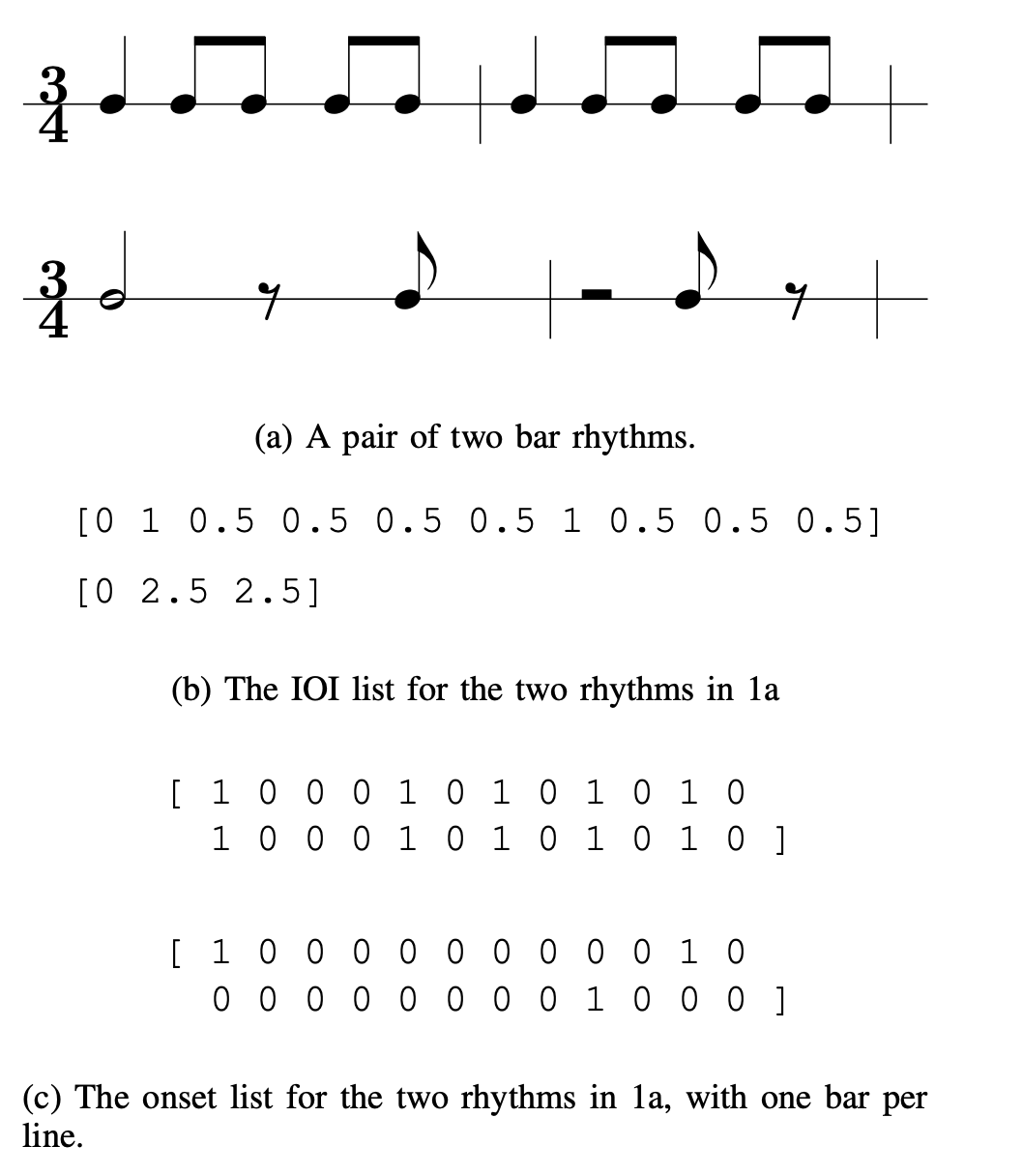

To encode the rhythm, we parsed .abc music files with music21 to obtain inter-onset intervals (IOIs) and encoded them as sequences of 0s and 1s, with each value representing a 16th-note division.
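One plausible reading of this encoding, sketched below, marks the 16th-note slot where a note starts with a 1 and every other slot with a 0. This onset-grid convention is an assumption, as is the function name `encode_rhythm`. The input is a list of durations in quarter-note units, which in music21 would typically come from each note's `duration.quarterLength`.

```python
from typing import List, Sequence


def encode_rhythm(durations_in_quarters: Sequence[float],
                  steps_per_quarter: int = 4) -> List[int]:
    """Turn note durations into an onset grid with one slot per 16th note.

    Assumed convention: 1 = a note starts in this slot, 0 = no onset.
    """
    grid: List[int] = []
    for duration in durations_in_quarters:
        steps = round(duration * steps_per_quarter)
        if steps < 1:
            raise ValueError("duration is shorter than one grid step")
        grid.append(1)                   # onset of the note
        grid.extend([0] * (steps - 1))   # remainder of the note's duration
    return grid


# Quarter, two 8ths, two 16ths, one 8th (2.5 beats in total).
print(encode_rhythm([1.0, 0.5, 0.5, 0.25, 0.25, 0.5]))
# -> [1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 1, 0]
```

Durations that do not fall on the 16th-note grid, such as triplets, are rounded by this sketch and would need separate handling.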

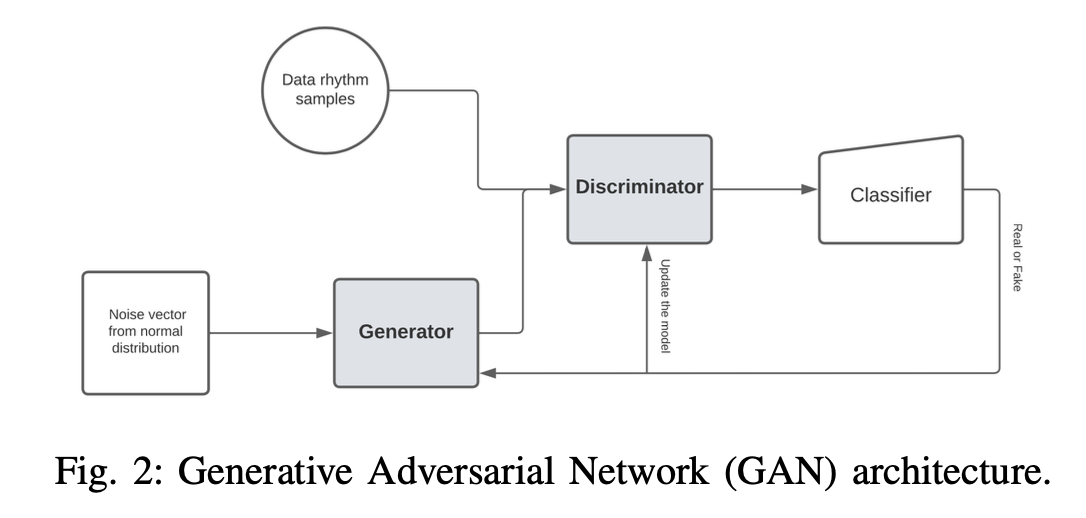

In this case, we used a Generative Adversarial Network (GAN) model.

Here are our results:

It's promising that the average minimum distance for the GAN model is much closer to that of the real dataset than the random model's is. However, the standard deviation for our model is far lower than for the real dataset, which could mean that our generated rhythms are all quite similar to each other.

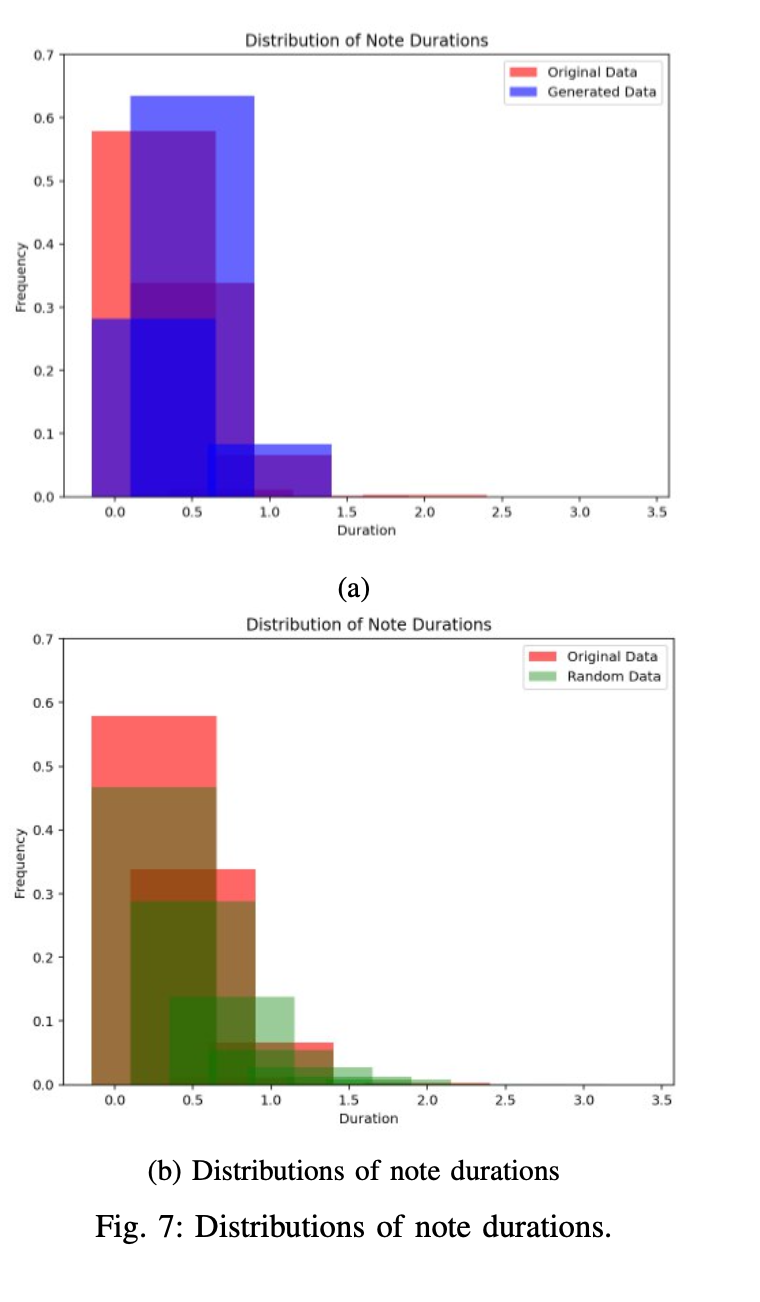

We can observe that, in general, our model reproduces the distribution of note durations, although it tends to produce more 8th notes, whereas the original dataset contains more 16th notes.

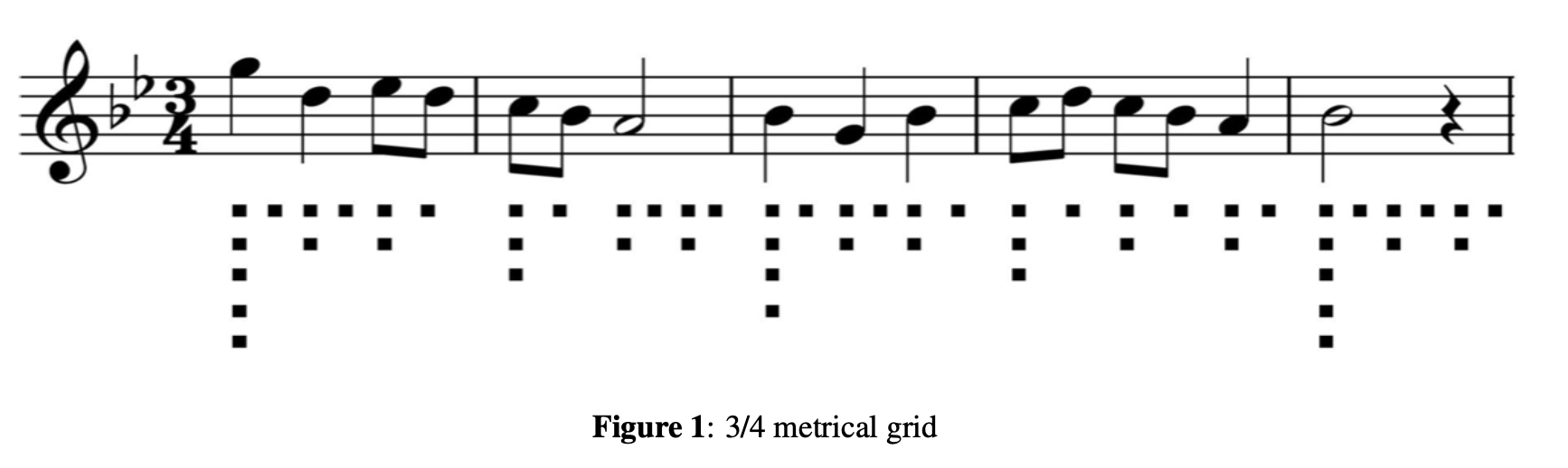

Our model for melody generation adopts a hierarchical Markov chain approach: it combines multiple Markov chains, each with its own transition matrix learned for one layer of the metrical grid.
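To illustrate the building block, here is a minimal sketch of a single first-order Markov chain over MIDI pitches: it estimates transition probabilities from example sequences and samples a new sequence. It covers one layer only; how the per-layer chains are combined across the metrical grid is not shown, and all names and the toy melody are illustrative.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Sequence

Transitions = Dict[int, Dict[int, float]]


def fit_transitions(sequences: Sequence[Sequence[int]]) -> Transitions:
    """Estimate P(next pitch | current pitch) from example pitch sequences."""
    counts: Dict[int, Counter] = defaultdict(Counter)
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    return {
        state: {nxt: c / sum(ctr.values()) for nxt, c in ctr.items()}
        for state, ctr in counts.items()
    }


def generate(transitions: Transitions, start: int, length: int,
             rng: random.Random) -> List[int]:
    """Sample a pitch sequence; stops early if a state has no known successor."""
    seq = [start]
    while len(seq) < length:
        options = transitions.get(seq[-1])
        if not options:
            break
        states = list(options)
        weights = [options[s] for s in states]
        seq.append(rng.choices(states, weights=weights, k=1)[0])
    return seq


# Toy training melody (MIDI pitches), made up for illustration.
transitions = fit_transitions([[60, 62, 64, 62, 60]])
print(generate(transitions, start=60, length=8, rng=random.Random(0)))
```

In a hierarchical setup, one such table would be fitted per metrical layer, for example one for downbeats and one for weaker subdivisions.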

Distance metric: the Euclidean distance between each generated sample and the nearest score in the original dataset. It considers pitch distances weighted by the layer of the metrical grid.
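A rough sketch of such a weighted distance follows. It assumes melodies are equal-length lists of MIDI pitches and that each position carries a weight reflecting its metrical layer; the weight values and function names are hypothetical and chosen only to make the example concrete.

```python
import math
from typing import Sequence


def weighted_euclidean(a: Sequence[float], b: Sequence[float],
                       weights: Sequence[float]) -> float:
    """Euclidean distance where each position's squared difference is weighted."""
    if not (len(a) == len(b) == len(weights)):
        raise ValueError("all inputs must have the same length")
    return math.sqrt(sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights)))


def distance_to_nearest(sample: Sequence[float],
                        corpus: Sequence[Sequence[float]],
                        weights: Sequence[float]) -> float:
    """Weighted distance from a generated melody to its closest corpus melody."""
    return min(weighted_euclidean(sample, ref, weights) for ref in corpus)


# Hypothetical weights: stronger metrical positions count more.
weights = [4, 1, 2, 1]
melodies = [[60, 64, 64, 67], [72, 71, 69, 67]]
sample = [60, 62, 64, 65]

print(distance_to_nearest(sample, melodies, weights))  # sqrt(8), about 2.83
```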

Evaluation metric: mean absolute interval (MAI) and pitch distribution (measured using KL divergence).
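Both metrics are short to write down. The sketch below makes several assumptions: pitches are MIDI numbers, the pitch distribution is taken over the 12 pitch classes, a small smoothing constant avoids division by zero, and the divergence is computed as KL(generated || corpus). None of these choices is prescribed by the description above, and the melodies are toy data.

```python
import math
from collections import Counter
from typing import List, Sequence


def mean_absolute_interval(pitches: Sequence[int]) -> float:
    """Average absolute size, in semitones, of consecutive melodic intervals."""
    intervals = [abs(b - a) for a, b in zip(pitches, pitches[1:])]
    if not intervals:
        raise ValueError("need at least two pitches")
    return sum(intervals) / len(intervals)


def pitch_class_distribution(pitches: Sequence[int],
                             smoothing: float = 1e-6) -> List[float]:
    """Smoothed probability of each of the 12 pitch classes."""
    counts = Counter(p % 12 for p in pitches)
    total = len(pitches) + 12 * smoothing
    return [(counts[pc] + smoothing) / total for pc in range(12)]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) in nats; q must be strictly positive wherever p is."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


corpus_melody = [60, 62, 64, 62, 60]      # toy data
generated_melody = [60, 64, 67, 64, 60]   # toy data

print(mean_absolute_interval(corpus_melody))     # -> 2.0
print(mean_absolute_interval(generated_melody))  # -> 3.5
print(kl_divergence(pitch_class_distribution(generated_melody),
                    pitch_class_distribution(corpus_melody)))
```

KL divergence is not symmetric, so the direction should be fixed once and reported alongside the numbers.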

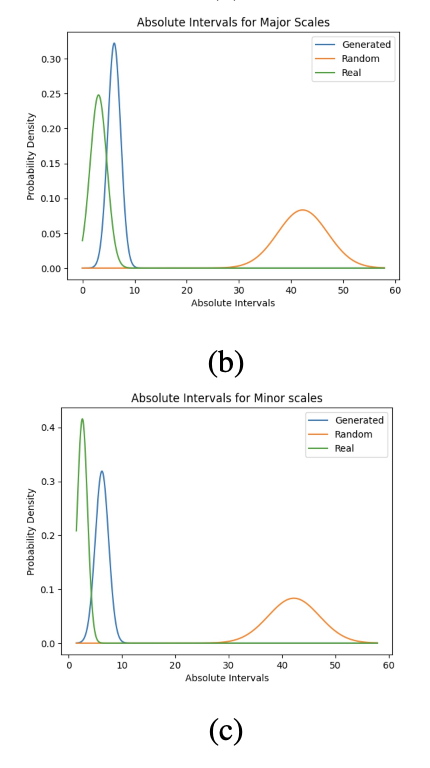

The MAI metric showed that our generated results align more closely with the original dataset's interval distribution but tend to produce larger intervals.

Pitch distribution analysis via KL divergence indicated that our model statistically replicates musical pitch characteristics well, though this does not guarantee the quality of the generated music from a human listening perspective.

In the final lab, we worked on generating complete 8-bar musical pieces, encompassing rhythm, melody, and musical structure, including characteristics such as repetitions within the score.

Our model consisted of four key steps: template generation, guide tone generation, regularization, and elaboration.

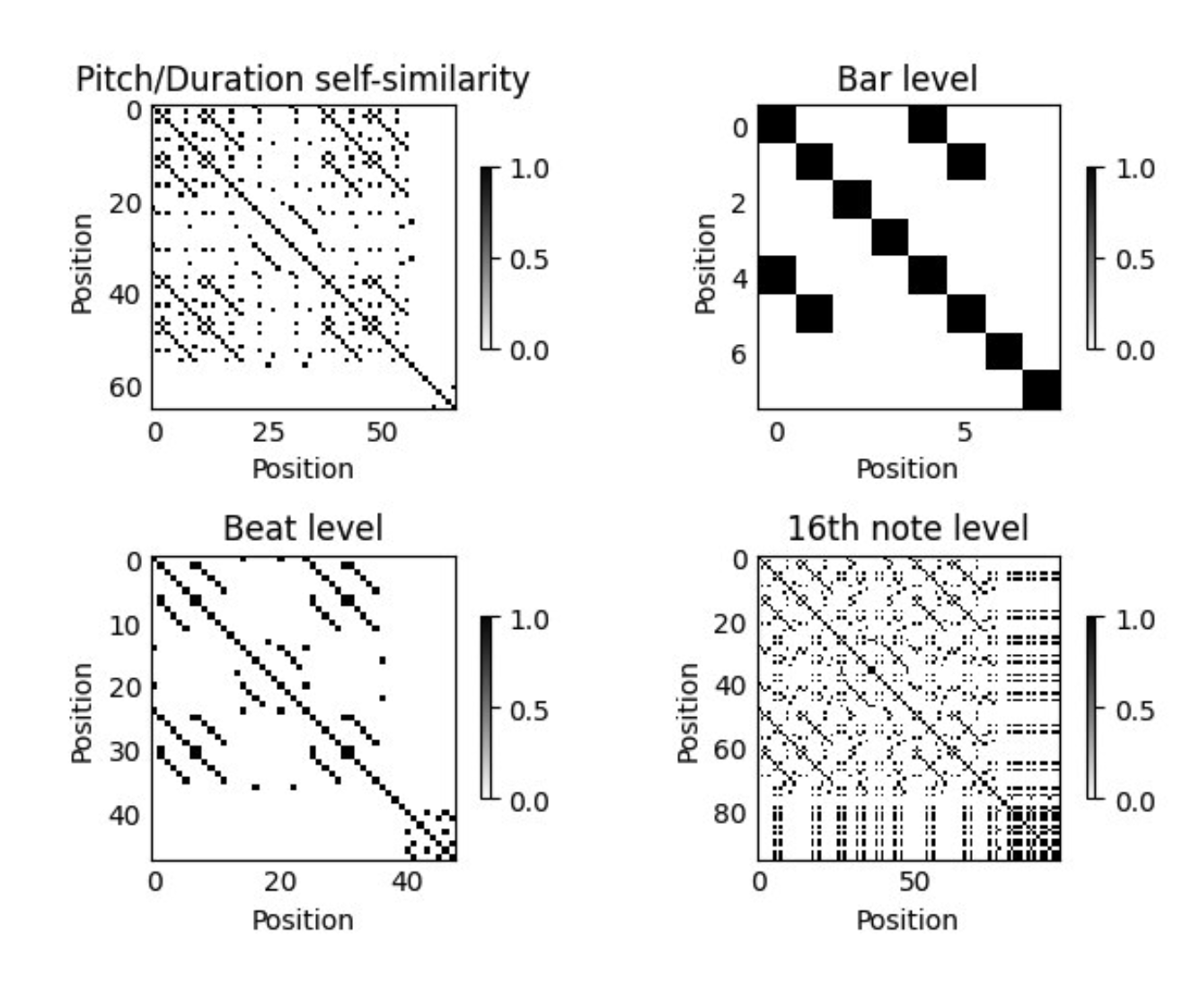

For the evaluation, we used the same metrics as in lab 2, and our results were quite satisfying. This time we also used self-similarity matrices to qualitatively analyze the repetition patterns of our musical pieces.

These matrices demonstrated that we successfully imposed the repetition pattern on our pieces.

Here is an example of our music generation.

Domain knowledge is important: delving into music theory, a new realm for me, presented a challenge in comprehending the domain and selecting the most suitable ML techniques.

ML application process: I noticed how important it is to methodically track each step in the process. It's not solely about the model or technique; decisions about data encoding and the choice of evaluation metrics are equally crucial.

Team: Davide Romano, Barbara Ruvolo, Jeremi Do Dinh, Mengjie Zou

Author: Davide Romano